Diffusion Self-Guidance for Controllable Image Generation

Abstract

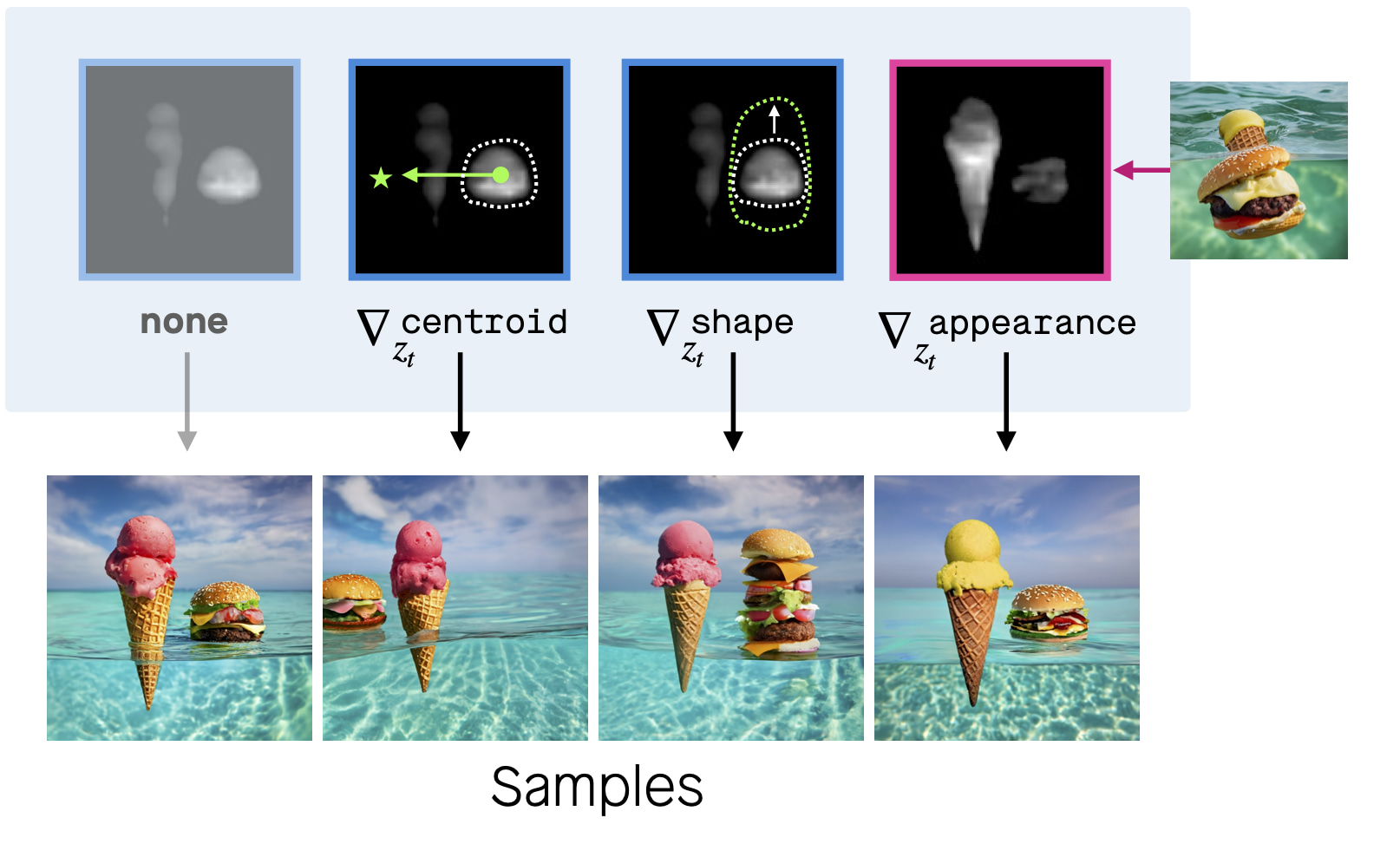

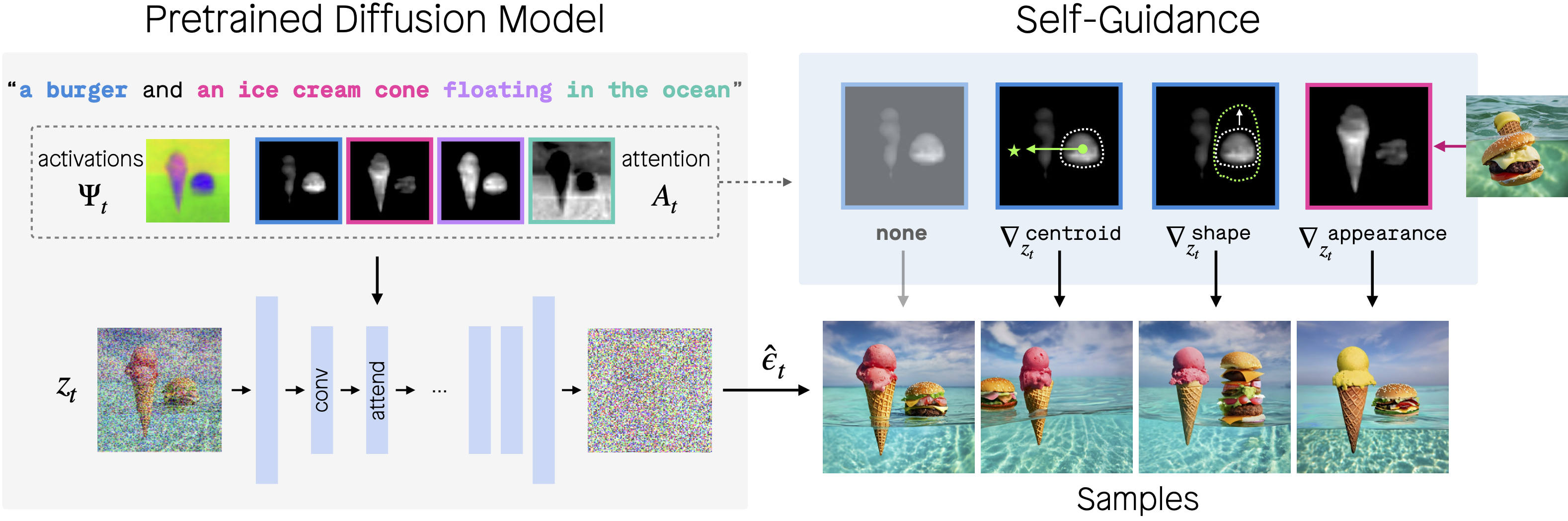

Large-scale generative models are capable of producing high-quality images from detailed text descriptions. However, many aspects of an image are difficult or impossible to convey through text. We introduce self-guidance, a method that provides greater control over generated images by guiding the internal representations of diffusion models. We demonstrate that properties such as the shape, location, and appearance of objects can be extracted from these representations and used to steer sampling.

Self-guidance works similarly to classifier guidance, but uses signals present in the pretrained model itself, requiring no additional models or training. We show how a simple set of properties can be composed to perform challenging image manipulations, such as modifying the position or size of objects, merging the appearance of objects in one image with the layout of another, composing objects from many images into one, and more. We also show that self-guidance can be used to edit real images.
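The analogy with classifier guidance can be illustrated with a toy sketch: an energy defined on some internal quantity of the model is differentiated with respect to the latent, and the gradient is added to the noise prediction. Everything below is an assumption made for illustration, not the authors' implementation: the 1-D latent, the softmax used as a stand-in for an attention map, the finite-difference gradient (a real model would use automatic differentiation), and the names `guided_eps`, `sigma`, and `weight`.

```python
import math

def softmax(values):
    peak = max(values)
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]

def centroid(latent):
    # Hypothetical stand-in for an internal representation:
    # a softmax over the latent plays the role of an attention map.
    attn = softmax(latent)
    last = len(attn) - 1
    return sum((i / last) * a for i, a in enumerate(attn))

def energy(latent, target):
    # Squared distance between the current centroid and the desired one.
    return (centroid(latent) - target) ** 2

def numeric_grad(fn, point, step=1e-5):
    # Central finite differences; used here only to keep the sketch dependency-free.
    grad = []
    for i in range(len(point)):
        up = point[:i] + [point[i] + step] + point[i + 1:]
        down = point[:i] + [point[i] - step] + point[i + 1:]
        grad.append((fn(up) - fn(down)) / (2 * step))
    return grad

def guided_eps(eps, grad, sigma, weight):
    # Add the scaled energy gradient to the predicted noise.
    return [e + weight * sigma * g for e, g in zip(eps, grad)]

latent = [0.0] * 5   # toy latent; its centroid starts at 0.5
target = 0.9         # desired centroid
eps = [0.0] * 5      # toy noise prediction (a real model would supply this)
sigma = 1.0          # toy noise level

grad = numeric_grad(lambda z: energy(z, target), latent)
eps_hat = guided_eps(eps, grad, sigma, weight=25.0)

# Simplified denoising step: subtract the guided noise from the latent.
new_latent = [z - sigma * e for z, e in zip(latent, eps_hat)]
print(energy(latent, target), energy(new_latent, target))
```

In this toy setting, the step taken with the guided noise lowers the energy, so the centroid moves toward the target. No extra network is involved; the signal comes from a quantity computed from the latent itself.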

Results

Move and resize objects

Using self-guidance to change the properties of only one object, we can move or resize that object without modifying the rest of the image. The prompts and edits offered in the interactive demo are listed below.

Prompts:

“a raccoon in a barrel going down a waterfall”

“distant shot of the tokyo tower with a massive sun in the sky”

“a fluffy cat sitting on a museum bench looking at an oil painting of cheese”

Edits: move ↑, move ↓, move ←, move →, shrink, enlarge
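Moving or resizing an object presupposes that its position and size can be read off some per-object map. The sketch below shows one hedged way to do that on a small 2-D map for a single word. The map values, the hard threshold in `size`, and the function names are assumptions made for illustration; a differentiable version would need a soft threshold.

```python
def centroid_2d(attn):
    # Attention-weighted mean position, normalized to [0, 1] in x and y.
    height, width = len(attn), len(attn[0])
    total = sum(sum(row) for row in attn)
    cx = sum(x * attn[y][x] for y in range(height) for x in range(width)) / total
    cy = sum(y * attn[y][x] for y in range(height) for x in range(width)) / total
    return cx / (width - 1), cy / (height - 1)

def size(attn, threshold=0.5):
    # Fraction of cells whose attention exceeds the threshold (hard threshold
    # is an illustrative simplification).
    cells = [value for row in attn for value in row]
    return sum(1 for value in cells if value > threshold) / len(cells)

def move_loss(attn, target):
    # L1 distance between the current centroid and a target centroid.
    cx, cy = centroid_2d(attn)
    return abs(cx - target[0]) + abs(cy - target[1])

# Toy 4x4 attention map with the object in the top-left corner.
attn = [
    [0.9, 0.8, 0.0, 0.0],
    [0.7, 0.6, 0.0, 0.0],
    [0.0, 0.0, 0.0, 0.0],
    [0.0, 0.0, 0.0, 0.0],
]
# The same object shifted two columns to the right.
shifted = [row[2:] + row[:2] for row in attn]

cx, cy = centroid_2d(attn)
move_right_target = (cx + 2 / 3, cy)  # "move right" expressed as a new target centroid

print(move_loss(attn, move_right_target), move_loss(shifted, move_right_target))
print(size(attn), size(shifted))
```

A "move →" edit then amounts to lowering `move_loss` for one word while leaving the other words' properties fixed. Shrinking or enlarging would target `size` in the same way.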

Appearance transfer from real images

By guiding the appearance of a generated object to match that of one in a real image, we can create scenes depicting an object from real life, similarly to DreamBooth, but without any fine-tuning and only using one image.

Prompts:

“a photo of a chow chow wearing a ... outfit”

“a DSLR photo of a teapot...”

Options: “purple wizard”, “chef”, “superman”
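One way to picture "guiding the appearance of a generated object to match that of one in a real image" is to summarize each object as an attention-weighted average of per-location feature vectors and then penalize the distance between the two summaries. The sketch below assumes flat lists of toy features and weights. The names `appearance` and `appearance_loss` and all values are hypothetical, not taken from the authors' code.

```python
def appearance(features, weights):
    # Weighted mean of feature vectors; weights play the role of the object's mask.
    total = sum(weights)
    dims = len(features[0])
    return [
        sum(w * f[d] for f, w in zip(features, weights)) / total
        for d in range(dims)
    ]

def appearance_loss(current, reference):
    # L1 distance between two appearance descriptors.
    return sum(abs(c - r) for c, r in zip(current, reference))

# Toy features at three locations of a real image, and the object's weights there.
real_features = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
real_weights = [1.0, 0.0, 1.0]

# Toy features and weights for the object in the image being generated.
gen_features = [[0.0, 1.0], [0.0, 1.0], [1.0, 0.0]]
gen_weights = [1.0, 1.0, 0.0]

reference = appearance(real_features, real_weights)
current = appearance(gen_features, gen_weights)
print(reference, current, appearance_loss(current, reference))
```

Because the descriptor averages over locations, it says little about where the object sits, which is why a single real image can supply appearance while the layout comes from elsewhere.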

Real image editing

Our method also enables the spatial manipulation of objects in real images.

Prompts:

“an eclair and a shot of espresso”

“a hot dog, fries, and a soda on a solid background”

Edits: shrink width, reconstruct, move, enlarge, restyle

Sample new appearances

By guiding object shapes toward reconstruction of an image's layout, we can sample new appearances for a given scene. We compare to ControlNet v1.1-Depth and Prompt-to-Prompt. Switch between the different styles below.

Prompts:

“a bear wearing a suit eating his birthday cake out of the fridge in a dark kitchen”

“a parrot riding a horse down a city street”

Styles: appearance 1, appearance 2, appearance 3, controlnet, prompt-to-prompt

Mix-and-match

By guiding samples to take object shapes from one image and appearance from another, we can rearrange images into layouts from other scenes. We can also sample new layouts of a scene by only guiding appearance. Find your favorite combination below.

Prompt:

“a suitcase, a bowling ball, and a phone washed up on a beach after a shipwreck”

Options: #1, #2, #3, #4, random #1, random #2

Compositional generation

A new scene can be created by collaging individual objects from different images (the first three columns here). Alternatively, if objects cannot be combined at their original locations because the images' layouts are incompatible (as in the bottom row), we can borrow only their appearance and specify the layout with a new image to produce a composition (last two columns).

“a picnic blanket, a fruit tree, and a car by the lake” (objects: blanket, tree, car)

“a top-down photo of a tea kettle, a bowl of fruit, and a cup of matcha” (objects: matcha, kettle, fruit)

“a dog wearing a knit sweater and a baseball cap drinking a cocktail” (objects: sweater, cocktail, cap)

Manipulating non-objects

The properties of any word in the input prompt can be manipulated, not only nouns. Here, we show examples of relocating adjectives and verbs. The last example shows a case in which additional self-guidance can correct improper attribute binding.

“a cat and a monkey laughing on a road” (edit: laughing right)

“a messy room” (edit: messy location, at (0.3,0.6) or (0.8,0.8))

“green hat, blue book, yellow shoes, red jacket” (edit: red to jacket, yellow to shoes)

Limitations

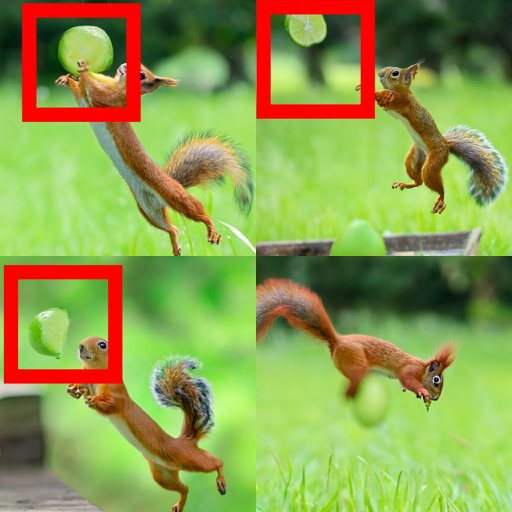

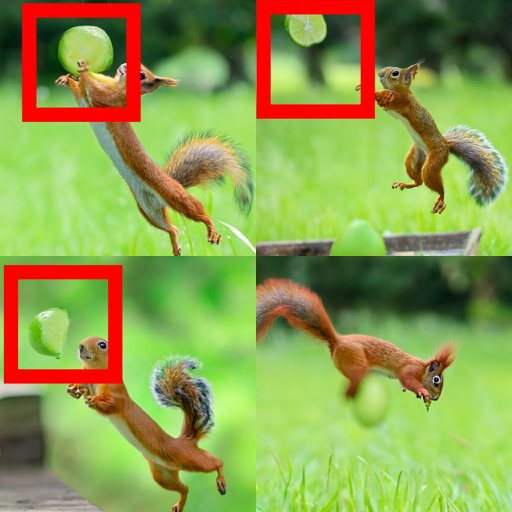

Setting high guidance weights for appearance terms tends to introduce unwanted leakage of object position. Similarly, while heavily guiding the shape of one word matches that object’s layout as expected, high guidance on all token shapes leaks appearance information. Finally, in some cases, objects are entangled in attention space, making it difficult to control them independently.

“a squirrel trying to catch a lime mid-air” (lime guided)

“a picture of a cake”

“a potato sitting on a couch with a bowl of popcorn watching football”

Citation

@inproceedings{epstein2023selfguidance,
  title={Diffusion Self-Guidance for Controllable Image Generation},
  author={Epstein, Dave and Jabri, Allan and Poole, Ben and Efros, Alexei A. and Holynski, Aleksander},
  booktitle={Advances in Neural Information Processing Systems},
  year={2023}
}

Acknowledgements

We thank Oliver Wang, Jason Baldridge, Lucy Chai, and Minyoung Huh for their helpful comments. Dave is supported by the PD Soros Fellowship. Dave and Allan conducted part of this research at Google, with additional funding provided by DARPA MCS and ONR MURI.
